How to Evaluate Assistant Conversations#

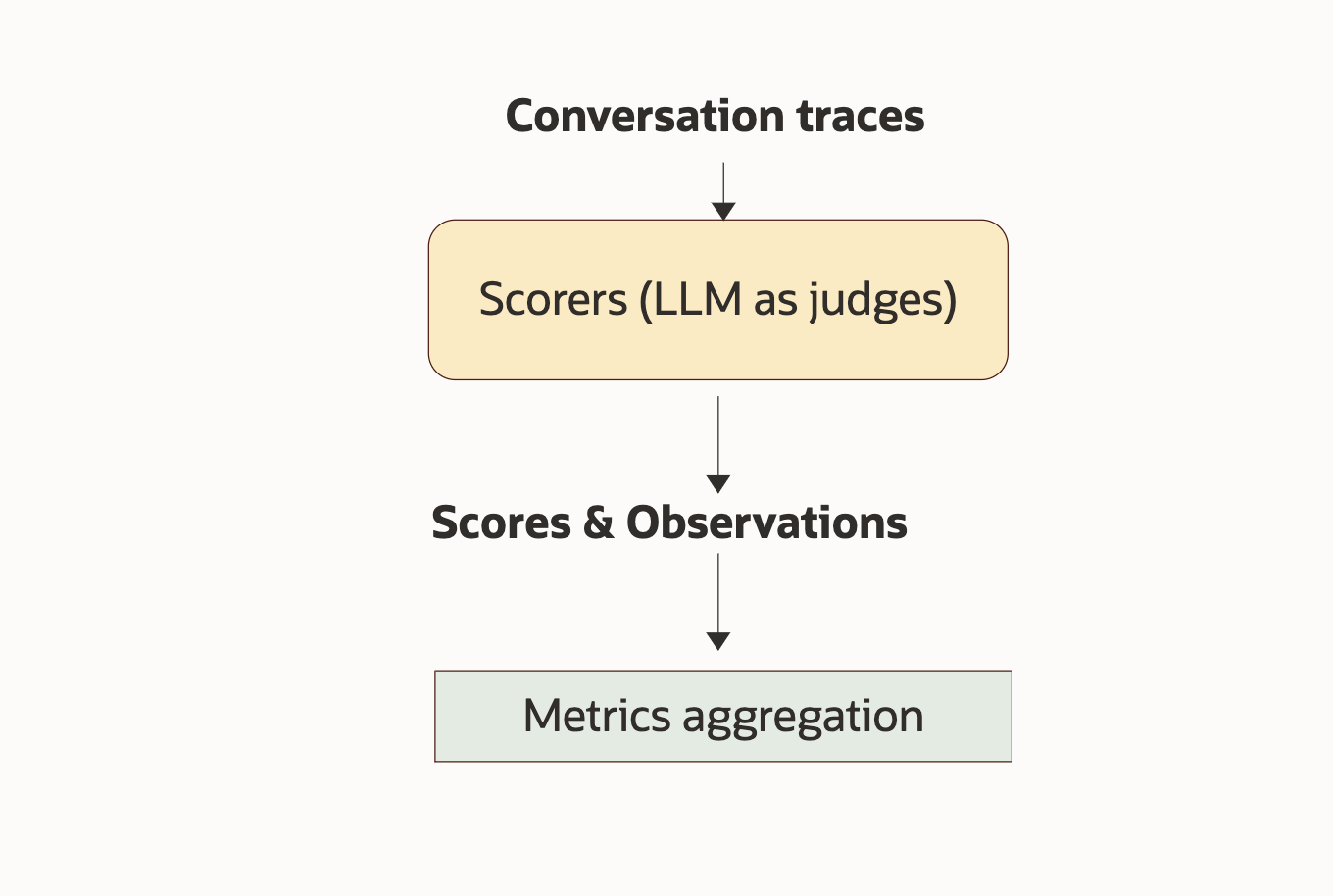

Evaluating the robustness and performance of assistants requires careful conversation assessment. The ConversationEvaluator API in WayFlow enables evaluation of conversations using LLM-powered criteria—helping you find weaknesses and improve your assistants.

This guide demonstrates the process of constructing, scoring, and evaluating a conversation.

WayFlow supports several LLM API providers. Select an LLM from the options below:

from wayflowcore.models import OCIGenAIModel

if __name__ == "__main__":

llm = OCIGenAIModel(

model_id="provider.model-id",

service_endpoint="https://url-to-service-endpoint.com",

compartment_id="compartment-id",

auth_type="API_KEY",

)

from wayflowcore.models import VllmModel

llm = VllmModel(

model_id="model-id",

host_port="VLLM_HOST_PORT",

)

from wayflowcore.models import OllamaModel

llm = OllamaModel(

model_id="model-id",

)

Basic implementation#

Assume you want to evaluate the following assistant conversation, which purposefully exhibits poor assistant performance.

from wayflowcore.messagelist import MessageList

# Create a sample conversation (with intentionally poor assistant responses).

bad_conversation = MessageList()

for user_message, agent_message in [

(

"I'm looking for help planning a trip to Paris. Can you suggest some activities?",

"Sure! Paris is a city in the United States, famous for its beaches and Hollywood. You should definitely visit Disneyland and the Grand Canyon.",

),

(

"No, I meant Paris, France. Can you recommend some museums and maybe some good restaurants?",

"For Paris, Texas, there aren't many museums, but you can check out the local diner for some authentic Southern food.",

),

(

"No, Im talking about Paris in France! The capital city! Can you suggest some French museums and restaurants?",

"It seems like you're interested in visiting the Eiffel Tower. Make sure to bring a swimsuit because it's right by the ocean. Also, don't forget to pack for a desert climate.",

),

(

"That's completely wrong. Paris is in a temperate climate, and the Eiffel Tower is nowhere near an ocean.",

"I'm sorry, but Paris is definitely a desert city with an ocean nearby. You might be confusing it with another city.",

),

(

"Can you at least tell me how to get to the Louvre Museum from the Eiffel Tower?",

"The Louvre is not in Paris. It's in Rome, Italy. To get there, you should take a flight from Paris to Rome.",

),

]:

bad_conversation.append_user_message(user_message)

bad_conversation.append_agent_message(agent_message)

The conversation alternates user and assistant messages, simulating a scenario with misunderstandings and wrong information.

In a production context, you system would be collecting conversations, and you would evaluate then offline. You can use serialization to serialize conversations easily in your production environment, and reload them later for offline evaluation:

from wayflowcore.serialization import serialize, deserialize

serialized_messages = serialize(bad_conversation)

bad_conversation = deserialize(MessageList, serialized_messages)

Defining the LLM to use as a judge#

We will need a LLM to judge the conversations. The first step is to instantiate an LLM supported by WayFlow.

from wayflowcore.models import VllmModel

# Instantiate a language model (LLM) for automated evaluation

llm = VllmModel(

model_id="LLAMA_MODEL_ID",

host_port="LLAMA_API_URL",

)

Defining scoring criteria#

The ConversationScorer is the component responsible for scoring the conversation according to specific criteria.

Currently, two scorers are supported in wayflowcore:

- The UsefulnessScorer score estimates the overall usefulness of the assistant from the conversation. It uses criteria such as:

The task completion efficiency: does it seem like the assistant is able to complete the tasks?

The level of proactiveness: is the assistant able to anticipate the user needs?

The ambiguity detection capability: does the assistant often requires clarification or is more autonomous?

- The UserHappinessScorer score estimates the level of happiness / frustration of the user from the conversation. It uses criteria such as:

The query repetition frequency: does the user need to repeat their questions?

The misinterpretation of user intent: is there misinterpretation from the assistant?

The conversation flow disruption: does the conversation flow seamlessly or is severely disrupted?

from wayflowcore.evaluation import UsefulnessScorer, UserHappinessScorer

happiness_scorer = UserHappinessScorer(

scorer_id="happiness_scorer1",

llm=llm,

)

usefulness_scorer = UsefulnessScorer(

scorer_id="usefulness_scorer1",

llm=llm,

)

You can, or course, implement your own versions for your specific use-case, by respecting the ConversationScorer APIs.

Setting up the evaluator#

The ConversationEvaluator combines scorers and applies them to the provided conversation(s):

from wayflowcore.evaluation import ConversationEvaluator

# Create the evaluator, which will use the specified scoring criteria.

evaluator = ConversationEvaluator(scorers=[happiness_scorer, usefulness_scorer])

Running the evaluation#

Trigger the evaluation and inspect the scoring DataFrame as output:

# Run the evaluation on the provided conversation.

results = evaluator.run_evaluations([bad_conversation])

# Display the results: each scorer gives a score for the conversation.

print(results)

# conversation_id happiness_scorer.score usefulness_scorer.score

# 0 ... 2.33 1.0

The result is a table where each scorer provides a score for each conversation.

Next steps#

After learning to use ConversationEvaluator to assess conversations, proceed to Perform Assistant Evaluation for more advanced evaluation techniques.

Full code#

Click on the card at the top of this page to download the full code for this guide, or view it below.

1# Copyright © 2025 Oracle and/or its affiliates.

2#

3# This software is under the Universal Permissive License

4# %%[markdown]

5# WayFlow Code Example - How to Evaluate Assistant Conversations

6# --------------------------------------------------------------

7

8# How to use:

9# Create a new Python virtual environment and install the latest WayFlow version.

10# ```bash

11# python -m venv venv-wayflowcore

12# source venv-wayflowcore/bin/activate

13# pip install --upgrade pip

14# pip install "wayflowcore==26.1"

15# ```

16

17# You can now run the script

18# 1. As a Python file:

19# ```bash

20# python howto_conversation_evaluation.py

21# ```

22# 2. As a Notebook (in VSCode):

23# When viewing the file,

24# - press the keys Ctrl + Enter to run the selected cell

25# - or Shift + Enter to run the selected cell and move to the cell below# (UPL) 1.0 (LICENSE-UPL or https://oss.oracle.com/licenses/upl) or Apache License

26# 2.0 (LICENSE-APACHE or http://www.apache.org/licenses/LICENSE-2.0), at your option.

27

28

29# This notebook demonstrates how to evaluate the quality of assistant-user conversations

30# using the ConversationEvaluator in WayFlow.

31

32

33# %%[markdown]

34## Define the llm

35

36# %%

37from wayflowcore.models import VllmModel

38

39# Instantiate a language model (LLM) for automated evaluation

40llm = VllmModel(

41 model_id="LLAMA_MODEL_ID",

42 host_port="LLAMA_API_URL",

43)

44

45

46# %%[markdown]

47## Define the conversation

48

49# %%

50from wayflowcore.messagelist import MessageList

51

52# Create a sample conversation (with intentionally poor assistant responses).

53bad_conversation = MessageList()

54for user_message, agent_message in [

55 (

56 "I'm looking for help planning a trip to Paris. Can you suggest some activities?",

57 "Sure! Paris is a city in the United States, famous for its beaches and Hollywood. You should definitely visit Disneyland and the Grand Canyon.",

58 ),

59 (

60 "No, I meant Paris, France. Can you recommend some museums and maybe some good restaurants?",

61 "For Paris, Texas, there aren't many museums, but you can check out the local diner for some authentic Southern food.",

62 ),

63 (

64 "No, Im talking about Paris in France! The capital city! Can you suggest some French museums and restaurants?",

65 "It seems like you're interested in visiting the Eiffel Tower. Make sure to bring a swimsuit because it's right by the ocean. Also, don't forget to pack for a desert climate.",

66 ),

67 (

68 "That's completely wrong. Paris is in a temperate climate, and the Eiffel Tower is nowhere near an ocean.",

69 "I'm sorry, but Paris is definitely a desert city with an ocean nearby. You might be confusing it with another city.",

70 ),

71 (

72 "Can you at least tell me how to get to the Louvre Museum from the Eiffel Tower?",

73 "The Louvre is not in Paris. It's in Rome, Italy. To get there, you should take a flight from Paris to Rome.",

74 ),

75]:

76 bad_conversation.append_user_message(user_message)

77 bad_conversation.append_agent_message(agent_message)

78

79

80# %%[markdown]

81## Serialize and Deserialize the conversation

82

83# %%

84from wayflowcore.serialization import serialize, deserialize

85

86serialized_messages = serialize(bad_conversation)

87bad_conversation = deserialize(MessageList, serialized_messages)

88

89

90# %%[markdown]

91## Define the scorers

92

93# %%

94from wayflowcore.evaluation import UsefulnessScorer, UserHappinessScorer

95

96happiness_scorer = UserHappinessScorer(

97 scorer_id="happiness_scorer1",

98 llm=llm,

99)

100usefulness_scorer = UsefulnessScorer(

101 scorer_id="usefulness_scorer1",

102 llm=llm,

103)

104

105

106# %%[markdown]

107## Define the conversation evaluator

108

109# %%

110from wayflowcore.evaluation import ConversationEvaluator

111

112# Create the evaluator, which will use the specified scoring criteria.

113evaluator = ConversationEvaluator(scorers=[happiness_scorer, usefulness_scorer])

114

115

116# %%[markdown]

117## Execute the evaluation

118

119# %%

120# Run the evaluation on the provided conversation.

121results = evaluator.run_evaluations([bad_conversation])

122

123# Display the results: each scorer gives a score for the conversation.

124print(results)

125# conversation_id happiness_scorer.score usefulness_scorer.score

126# 0 ... 2.33 1.0