Export as MCP Langchain server


Introduction to the MCP Server for a tested AI Optimizer & Toolkit configuration

This document describes how to re-use the configuration tested in the AI Optimizer and expose it as an MCP tool. The MCP tool can be consumed locally by Claude Desktop or deployed as a remote MCP server using the Python/LangChain framework.

This early-stage implementation supports communication via the stdio and sse transports, enabling interaction between the agent dashboard (for example, Claude Desktop) and the exported RAG tool.

NOTICE

At present, only configurations using Ollama or OpenAI for both chat and embedding models are supported. Broader provider support will be added in future releases.
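The rule above amounts to a simple predicate. The following Python sketch is illustrative only: the function name and the lowercase provider identifiers are assumptions, not names taken from the AI Optimizer code, and it reads the rule as allowing either supported provider for each of the two models.

```python
# Providers accepted for export, per the notice above (identifiers are assumed).
SUPPORTED_PROVIDERS = {"ollama", "openai"}


def is_exportable(chat_provider: str, embedding_provider: str) -> bool:
    """Hypothetical check: True only when both the chat and embedding providers are supported."""
    return all(
        provider.strip().lower() in SUPPORTED_PROVIDERS
        for provider in (chat_provider, embedding_provider)
    )
```

For example, `is_exportable("Ollama", "ollama")` returns True, while `is_exportable("openai", "other")` returns False because the embedding provider is not in the supported set.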

Export config

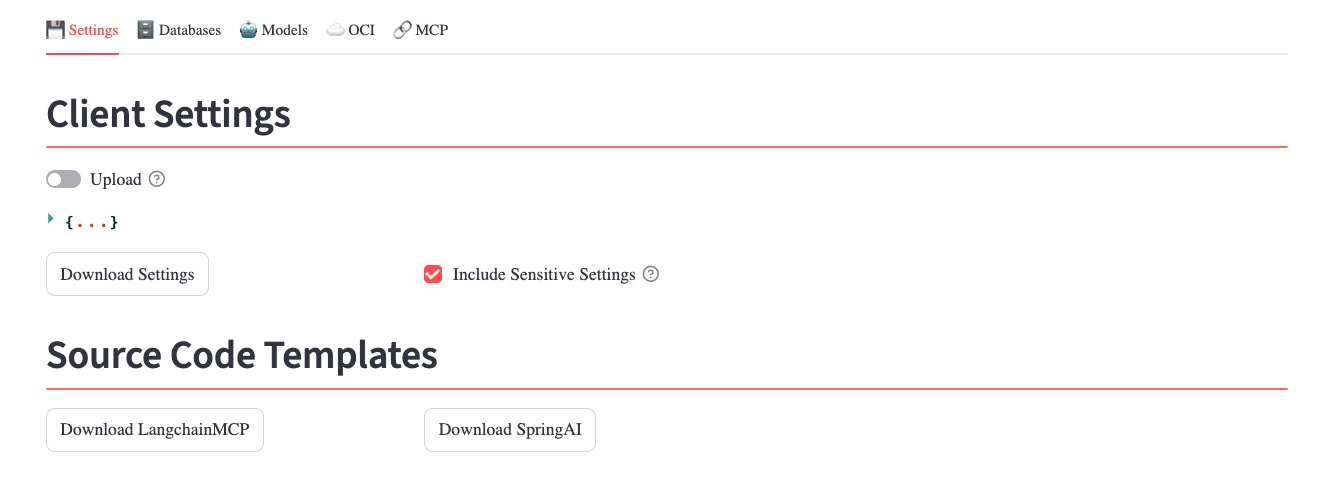

After testing a configuration in the AI Optimizer web interface, navigate to Settings → Client Settings.

If, and ONLY if, Ollama or OpenAI is selected as the provider for both the chat and embedding models:

- Select the Include Sensitive Settings checkbox.

- Click Download LangchainMCP to download a zip file containing a complete project template based on the currently selected configuration.

- Extract the archive into a directory, referred to in this document as <PROJECT_DIR>.
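If you prefer to script the extraction step, the Python sketch below unpacks the downloaded archive into <PROJECT_DIR> and reports which of two files named elsewhere in this document, rag_base_optimizer_config_mcp.py and optimizer_settings.json, it could not find. It assumes, without confirmation, that both files are part of the export; it searches recursively in case the archive wraps everything in a top-level folder.

```python
import zipfile
from pathlib import Path
from typing import List

# Files mentioned in this guide; assumed (not confirmed) to be part of the export.
EXPECTED_FILES = ("rag_base_optimizer_config_mcp.py", "optimizer_settings.json")


def extract_project(archive: Path, project_dir: Path) -> List[str]:
    """Extract the exported zip into project_dir and return expected files that are missing."""
    project_dir.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(project_dir)
    # Collect every extracted file name, at any depth.
    found = {p.name for p in project_dir.rglob("*") if p.is_file()}
    return [name for name in EXPECTED_FILES if name not in found]
```

A call such as `extract_project(Path("export.zip"), Path("my_project"))` (both names hypothetical) returns an empty list when both files are present.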

To run the exported project, follow the steps in the sections below.

NOTICE

- If you plan to run the application on a different host, update optimizer_settings.json to reflect non-local resources such as LLM endpoints, database hosts, wallet directories, and similar settings.

- If the Download LangchainMCP button is not visible, verify that Ollama or OpenAI is selected for both the chat model and the embedding model.
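When moving to another host, a small script can help find local endpoints in optimizer_settings.json. The sketch below is a hypothetical helper that makes no assumption about the file's schema: it walks every string value and, only for values that parse as URLs with a scheme, swaps a `localhost` or `127.0.0.1` host for one you supply while keeping the port and path. Database connect strings without a URL scheme, wallet directories, and other paths are left untouched and still need manual review.

```python
import json
from pathlib import Path
from urllib.parse import urlsplit, urlunsplit

LOCAL_HOSTS = {"localhost", "127.0.0.1"}


def retarget_url(value: str, new_host: str) -> str:
    """Replace a local host in a URL with new_host, keeping scheme, port, path and query."""
    parts = urlsplit(value)
    if not parts.scheme or parts.hostname not in LOCAL_HOSTS:
        return value  # not a URL, or not pointing at this machine
    # Note: credentials embedded as user:pass@ are not preserved by this sketch.
    netloc = new_host if parts.port is None else f"{new_host}:{parts.port}"
    return urlunsplit(parts._replace(netloc=netloc))


def retarget_urls(node, new_host: str):
    """Recursively apply retarget_url to every string in a parsed JSON structure."""
    if isinstance(node, dict):
        return {key: retarget_urls(value, new_host) for key, value in node.items()}
    if isinstance(node, list):
        return [retarget_urls(item, new_host) for item in node]
    if isinstance(node, str):
        return retarget_url(node, new_host)
    return node


def retarget_file(path: Path, new_host: str) -> None:
    """Rewrite the settings file in place; keep a backup copy before running this."""
    data = json.loads(path.read_text(encoding="utf-8"))
    path.write_text(json.dumps(retarget_urls(data, new_host), indent=2), encoding="utf-8")
```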

Prerequisites

The following software is required:

- Node.js: v20.17.0+

- npx/npm: v11.2.0+

- uv: v0.7.10+

- Claude Desktop (free version)
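To confirm the command-line prerequisites before setup, the Python sketch below runs each tool's `--version` and compares the result with the minimums listed above. It is a hypothetical helper, not part of the exported project; it checks npm only, on the assumption that npx is bundled with npm, and it does not check Claude Desktop.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple

# Minimum versions from the prerequisites list.
MINIMUMS = {"node": (20, 17, 0), "npm": (11, 2, 0), "uv": (0, 7, 10)}


def parse_version(text: str) -> Optional[Tuple[int, int, int]]:
    """Extract the first X.Y.Z version number from a tool's --version output."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        return None
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def installed_version(tool: str) -> Optional[Tuple[int, int, int]]:
    """Run `<tool> --version` if the tool is on PATH; return None if it is missing."""
    executable = shutil.which(tool)
    if executable is None:
        return None
    result = subprocess.run([executable, "--version"], capture_output=True, text=True, check=False)
    return parse_version(result.stdout + result.stderr)


def check_prerequisites() -> dict:
    """Map each tool to 'ok', 'too old', or 'missing or unreadable'."""
    status = {}
    for tool, minimum in MINIMUMS.items():
        version = installed_version(tool)
        if version is None:
            status[tool] = "missing or unreadable"
        elif version >= minimum:
            status[tool] = "ok"
        else:
            status[tool] = "too old"
    return status
```

Running `print(check_prerequisites())` prints one status per tool; tuple comparison handles multi-digit components correctly (for example, 0.7.10 is newer than 0.7.9).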

Setup

With uv installed, run the following commands FROM <PROJECT_DIR>:

Standalone client

A standalone client is included to allow command-line testing without an MCP client.

From <PROJECT_DIR>, run:

This is useful for validating the configuration before deploying the MCP server.

Run the RAG tool via a remote MCP server

Open rag_base_optimizer_config_mcp.py and verify the MCP server initialization.

- For a remote client, ensure the configuration matches the following:

- Next, verify or update the transport configuration:

- Start the MCP server in a separate shell:
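Before opening the inspector, you can check from another shell that the server is accepting connections. This stdlib-only sketch assumes the server listens on localhost port 9090, the port used in the inspector URL in the next section; change it if your transport configuration uses a different port. An open port only shows that something is listening, not that it is the MCP server.

```python
import socket


def is_listening(host: str = "localhost", port: int = 9090, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, and timeouts.
        return False
```

Calling `is_listening()` right after starting the server should return True; False usually means the server has not started or is bound to another port.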

Quick test via MCP “inspector”

- Start the MCP inspector:

- Open the generated URL, for example:

Configure the inspector as follows:

- Transport Type: Streamable HTTP

- URL: http://localhost:9090/mcp

Test the exported RAG tool.
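As a scriptable complement to the inspector, you can send the MCP `initialize` request yourself. The sketch below uses only the Python standard library and is based on the MCP Streamable HTTP transport: a JSON-RPC message is POSTed to the endpoint with an `Accept` header listing both `application/json` and `text/event-stream`. The protocol version string and client name are assumptions. The server may reply with plain JSON or an SSE stream, so the raw body is returned for inspection; a full client would also send the `notifications/initialized` notification and track the `Mcp-Session-Id` header, which this smoke test skips.

```python
import json
import urllib.request

MCP_URL = "http://localhost:9090/mcp"  # endpoint from the inspector settings above


def build_initialize_request(request_id: int = 1) -> dict:
    """Build a JSON-RPC 2.0 `initialize` message for an MCP server."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": "2025-03-26",  # assumed; use a revision your server supports
            "capabilities": {},
            "clientInfo": {"name": "smoke-test", "version": "0.1"},  # hypothetical client name
        },
    }


def send_initialize(url: str = MCP_URL, timeout: float = 10.0) -> str:
    """POST the initialize message and return the raw response body as text."""
    body = json.dumps(build_initialize_request()).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json, text/event-stream",
        },
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

With the server running, `print(send_initialize())` should show a response containing the server's name and capabilities; an HTTP error or timeout points to a transport or URL mismatch.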

Claude Desktop setup

The free version of Claude Desktop does not natively support remote MCP servers. For testing purposes, this limitation can be addressed using the mcp-remote proxy.

In Claude Desktop, navigate to Settings → Developer → Edit Config, and edit claude_desktop_config.json to add a reference to the remote MCP server using streamable-http:

Next, go to Settings → General → Claude Settings → Configure → Profile and fill in fields such as Full Name and What should we call you. In the What personal preferences should Claude consider in responses? field, enter the following text:

This forces the use of rag_tool for all relevant queries.

Restart Claude Desktop.
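The claude_desktop_config.json edit can also be scripted. This minimal sketch assumes the standard `mcpServers` layout of that file and the `npx mcp-remote <url>` invocation of the mcp-remote proxy; the server name "rag" is hypothetical and the URL is the one used with the inspector. It merges the entry into the existing configuration instead of overwriting other servers or settings. Close Claude Desktop and keep a backup before writing the file.

```python
import copy
import json
from pathlib import Path


def add_remote_server(config: dict, name: str, url: str) -> dict:
    """Return a copy of config with an mcp-remote proxy entry registered under mcpServers."""
    updated = copy.deepcopy(config)
    servers = updated.setdefault("mcpServers", {})
    # Claude Desktop launches this command; mcp-remote bridges its stdio to the remote URL.
    servers[name] = {"command": "npx", "args": ["mcp-remote", url]}
    return updated


def update_config_file(path: Path, name: str = "rag", url: str = "http://localhost:9090/mcp") -> None:
    """Merge the entry into claude_desktop_config.json, preserving other servers and settings."""
    text = path.read_text(encoding="utf-8") if path.exists() else ""
    config = json.loads(text) if text.strip() else {}
    path.write_text(json.dumps(add_remote_server(config, name, url), indent=2), encoding="utf-8")
```

Pass the path of the file opened by Edit Config, for example `update_config_file(Path("claude_desktop_config.json"))`, then restart Claude Desktop.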

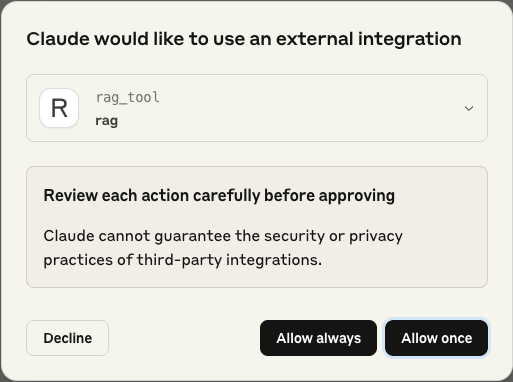

You may initially see warnings related to the rag_tool configuration. These warnings are expected and do not prevent tool activation. When you start a conversation, Claude Desktop prompts you to authorize the use of the rag tool:

If the question matches content stored in the vector store, the response will be grounded in that knowledge base. Otherwise, the LLM will fall back to its general training or other configured tools.

NOTICE

If you encounter issues during startup, inspect the logs for compatibility problems caused by older Node.js or npx versions used by the mcp-remote library.

Check installed Node.js versions with:

If multiple versions are present, Claude Desktop may default to an older one. Removing outdated versions and keeping only Node.js v20.17.0 or later can resolve these issues. Restart the MCP server and Claude Desktop, then test again.
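To see which Node.js installations are reachable on PATH, and in what order, the hypothetical Python helper below scans each PATH directory for a node executable and reports its version, flagging anything older than the v20.17.0 minimum. The first entry is normally the one a plain `node` call resolves to. Keep in mind that Claude Desktop may start with a different PATH than your shell, so results can differ from what it actually uses.

```python
import os
import re
import subprocess
import sys
from pathlib import Path
from typing import List, Optional, Tuple

MIN_NODE = (20, 17, 0)  # minimum Node.js version from the prerequisites
NODE_NAME = "node.exe" if sys.platform == "win32" else "node"


def parse_node_version(text: str) -> Optional[Tuple[int, int, int]]:
    """Parse output such as 'v20.17.0' into (20, 17, 0)."""
    match = re.search(r"v?(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        return None
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def node_candidates(path_value: str) -> List[Path]:
    """List node executables in PATH order, skipping empty entries and duplicates."""
    seen, found = set(), []
    for directory in path_value.split(os.pathsep):
        if not directory:
            continue
        candidate = Path(directory) / NODE_NAME
        if candidate.is_file() and os.access(candidate, os.X_OK):
            resolved = candidate.resolve()  # collapse symlinks pointing at the same binary
            if resolved not in seen:
                seen.add(resolved)
                found.append(candidate)
    return found


def report_node_versions(path_value: Optional[str] = None) -> List[str]:
    """Describe each node found on PATH (defaults to the current environment's PATH)."""
    path_value = os.environ.get("PATH", "") if path_value is None else path_value
    lines = []
    for executable in node_candidates(path_value):
        result = subprocess.run([str(executable), "--version"], capture_output=True, text=True, check=False)
        version = parse_node_version(result.stdout)
        if version is None:
            lines.append(f"{executable}: version unreadable")
        else:
            note = "" if version >= MIN_NODE else " (older than v20.17.0)"
            lines.append(f"{executable}: v{'.'.join(map(str, version))}{note}")
    return lines
```

Running `print("\n".join(report_node_versions()))` lists each installation; any line marked as older is a candidate for removal.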